Let's Make a Minor League Baseball Database!

A step-by-step tutorial to make your own Minor League Baseball Database using rvest.

Baseball is a game of numbers, some more accessible than others. Rates, averages, linear weights, aging curves, and WAR are only a few of the myriad statistics influencing decision makers in MLB franchises. An endless amount of data is accessible to the average fan at many sites, most notably the Lahman Baseball Database, which is the most robust catalog of MLB player statistics available to the public.

One area of relatively limited accessibility to the average fan is statistics for Minor League players. Though player statistics by position, year, and team are available at many sites, a thorough catalog of MiLB data akin to the Lahman database is unavailable. I wonder who Kris Bryant would be most similar to?

What do you mean Baseball Reference doesn’t calculate similarity scores for minor league players?

As If ButtonLet's make our own damn database!

To make our database we’re going to use rvest, an R package designed by Hadley Wickham at RStudio [^1]. The package scrapes HTML from webpages and extracts it into readable data. Let’s load the necessary packages and go from there:

#if you haven't done so already, install rvest from Wickham's github repository

# install.packages("devtools")

# install_github("hadley/rvest")

c('rvest','dplyr') -> packages #installs packages

lapply(packages, library, character.only = T)The function below will construct each team’s minor league website, for every desired year, and pull out the same table every time.

# prep scraper

url <- "http://www.baseball-reference.com/minors/"

teams=c("ARI")

stats_table <- '#team_batting.sortable.stats_table'

stats_id <- paste0(stats_table,' a')Let’s start with the Arizona Diamondbacks batting statistics from 2012-2014. We’ll call the data frame we’re about to pull the variable “minors_batting_ARI”. We’re reconstructing the url http://www.baseball-reference.com/minors/affiliate.cgi?id=ARI&year=2014 and instructing the scraper to pull the necessary data table and then repeat the process for next season. We’re calling the pulled data table ‘df’ for simplicity.

minors_batting_ARI <- data.frame()

# for loop by teams and seasonfor (teams in teams){

for (season in 2014:2012) {

html <- paste(url,"affiliate.cgi?id=",teams,"&year=",season,sep="")

# batting stats

read_html(html) %>%

html_nodes(stats_table) %>%

html_table(header = T) %>%

data.frame() %>%

tbl_df() -> dfSo far our code will scrape the batting table from the team’s minor league page, but we also need to extract each player’s Minor League baseball-reference id using it’s href. Isn’t that right Chris Young? No. Not you, Chris Young. The other, lankier Chris Young. We’re good man, no need to get angry.

This portion of code extracts the attributes of the URL in the table and changes them into characters:

# player info

read_html(html) %>%

html_nodes(stats_id) %>%

html_attr(name="href") %>% unlist %>% as.character -> min_playeridUsing R formatting code we delete unnecessary rows and create a column called bref_player_id to assign each player’s unique reference id. We’re trimming out characters from the href attributes we don’t need, leaving only the reference ids.

# clean dataframe and add team and season info

df <- df[1:nrow(df),]

df <- df[!na.omit(df$Rk=='Rk'),]

df$season <- c(season)

df$teams <- c(teams)

# remove url data

min_playerid=gsub("/register/player.cgi?id=", "", min_playerid,fixed = TRUE)

df$min_playerid <- c(min_playerid)

# bind to dataframe

minors_batting_ARI <- rbind(minors_batting_ARI,df)

}

}#to view the dataset and save it as a .csv in our working directory

View(minors_batting_ARI)

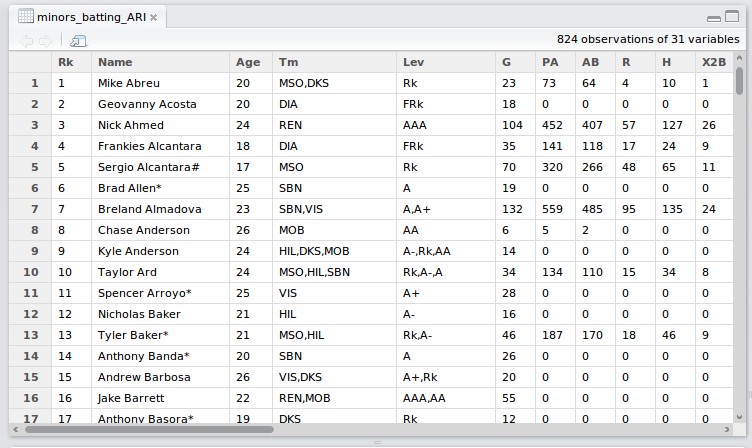

write.csv(minors_batting_ARI, "minors_batting_ARI.csv")There we are! Arizona’s minor league batting stats from 2012-2014! 824 instances may seem like too much, for only a three year period, but Baseball-reference.com doesn’t aggregate a player’s statistics by year; they do it by level. Therefore, some players may have multiple rows for the same season (aren’t you glad we pulled out the reference id!)

… let’s take a left turn on Grind Parkway and pull in all MiLB batting statistics for 2014.

MiLB Batting Stats for All Teams

First we’ll need a list of baseball-reference’s team codes. I’ll do the dirty work and include franchise codes for each team since 1969 if you want to play with that data 2.

teams=c("ARI","ATL","BAL","BOS","CHC","CHW","CIN","CLE","COL","DET","HOU","KCR","ANA","LAD","FLA","MIL","MIN","NYM","NYY","OAK","PHI","PIT","SDP","SFG","SEA","STL","TBD","TEX","TOR","WSN")url <- "http://www.baseball-reference.com/minors/"

teams=c("ARI","ATL","BAL","BOS","CHC","CHW","CIN","CLE","COL","DET","HOU","KCR","ANA","LAD","FLA","MIL","MIN","NYM","NYY","OAK","PHI","PIT","SDP","SFG","SEA","STL","TBD","TEX","TOR","WSN")

stats_table <- '#team_batting.sortable.stats_table'

stats_id <- paste0(stats_table,' a')

minors_batting <- data.frame()

# for loop by teams and season

for (teams in teams){

for (season in 2014:2014) {

html <- paste(url,"affiliate.cgi?id=",teams,"&year=",season,sep="")

# batting stats

read_html(html) %>%

html_nodes(stats_table) %>%

html_table(header = T) %>%

data.frame() %>%

tbl_df() -> df

# player info

read_html(html) %>%

html_nodes(stats_id) %>%

html_attr(name="href") %>% unlist %>% as.character -> min_playerid

# clean dataframe and add team and season info

df <- df[1:nrow(df),]

df <- df[!na.omit(df$Rk=='Rk'),]

df$season <- c(season)

df$teams <- c(teams)

# remove url data

min_playerid=gsub("/register/player.cgi?id=", "", min_playerid,fixed = TRUE)

df$min_playerid <- c(min_playerid)

# bind to

minors_batting <- rbind(minors_batting,df)

}

}

To query other seasons or teams, simply change the information selected. For example:

teams=c("OAK","TBD")

stats_table <- '#team_batting.sortable.stats_table'

stats_id <- paste0(stats_table,' a')

minors_batting <- data.frame()

for (teams in teams){ for (season in 2010:2005) {Will pull in minor league batting statistics for Oakland and Tampa Bay for the years 2005-2010.

Lets clean up all of the stray objects besides the minors_batting dataframe in our working environment before moving on:

rm(list=setdiff(ls(), "minors_batting"))Obligatory heads up!

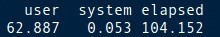

This code is querying 30 distinct URLs for every season, so multi-season outputs can take some time. Here are my system.time indicators for the above function:

The query takes about a 1:45 for every league-wide season pull. Pro-Tip! Get that query going and have some breakfast/lunch/dinner.

Coming up...

Stay tuned to the site for another post about similarity scores and to learn more about Kris Bryant’s best comparisons.

Update (10/3/2017)

Baseball Reference has made slight alterations to their website that has deprecated the code above as originally published. An updated version of the scraper code can be found at my MiLB Scraper on github. Feel free to fork my repository to propose changes. Edit My Code